Hi! I am a PhD student at the Institute for Adaptive and Neural Computation and part of the APRIL research lab in Edinburgh, supervised by Dr. Antonio Vergari and Dr. Iain Murray.

My research focuses on understanding when and how we can design machine learning systems that enable efficient and reliable inference while remaining useful across many applications. In particular, my work revolves around themes such as tensor factorizations, knowledge graphs, neurosymbolic methods, and models that enable tractable probabilistic inference. I am also interested in the expressiveness-efficiency trade-off of probabilistic models, with the aim of devising new methods that are expressive while also coming with theoretical and practical guarantees.

Useful links: Curriculum Vitae

Publications

For a complete list refer to Semantic Scholar or Google Scholar.

L. Loconte, A. Javaloy, A. Vergari.

arXiv preprint.

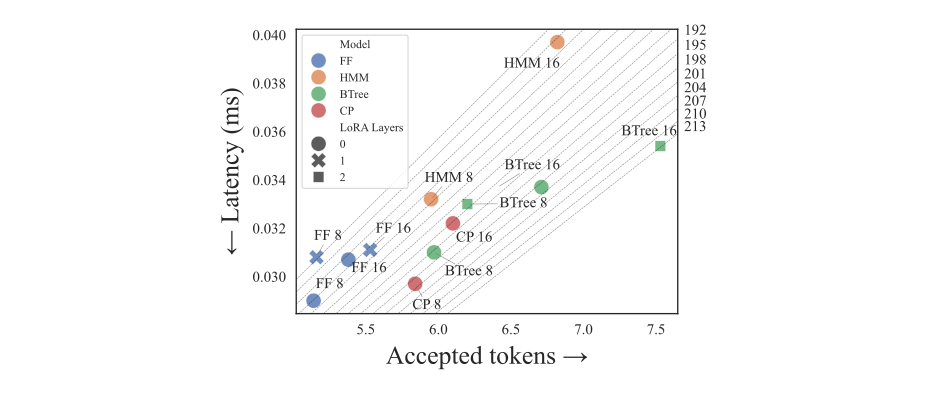

A. Grivas, L. Loconte, E. van Krieken, P. Nawrot, Y. Zhao, E. Wielewski, P. Minervini, E. Ponti, A. Vergari.

arXiv preprint.

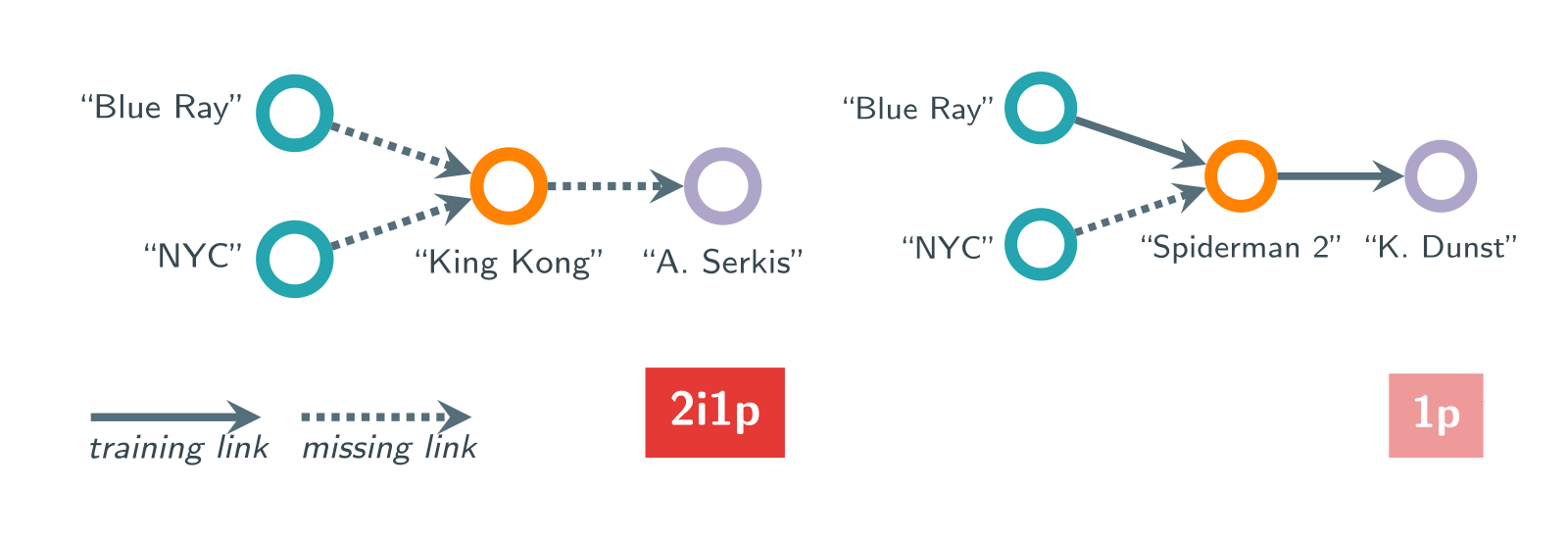

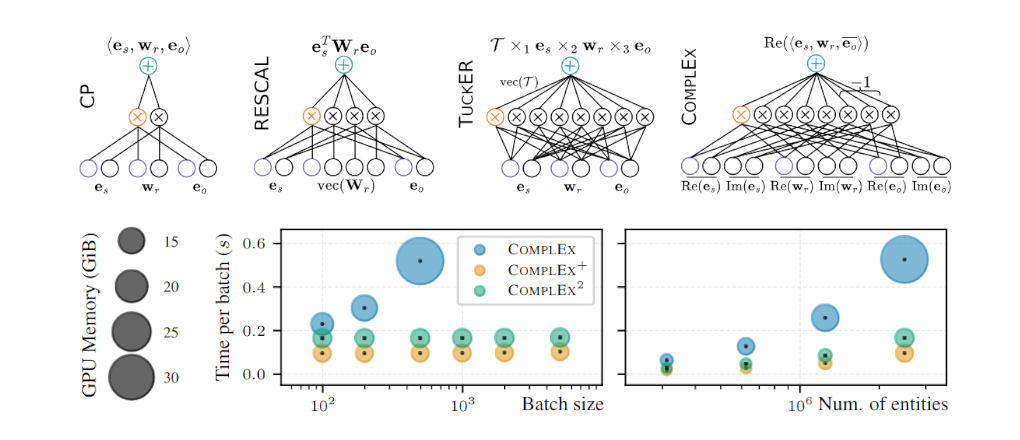

C. Gregucci, B. Xiong, D. Hernández, L. Loconte, P. Minervini, S. Staab, A. Vergari.

ICML 2025. Spotlight (top 2.6%).

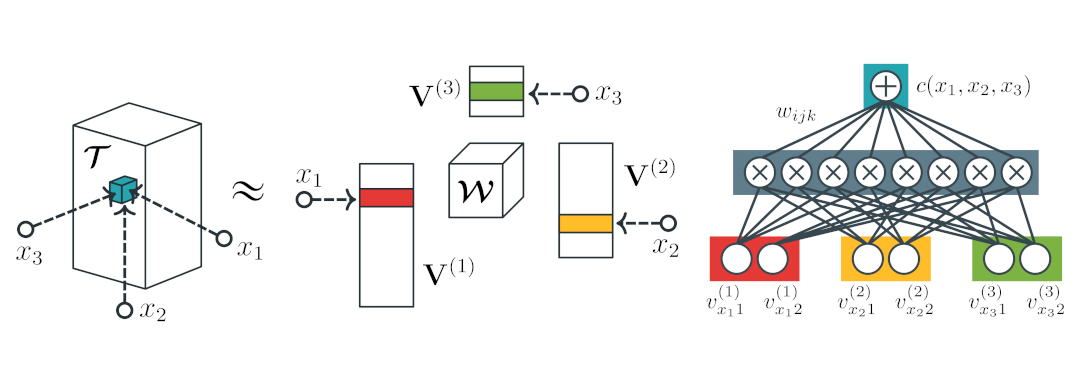

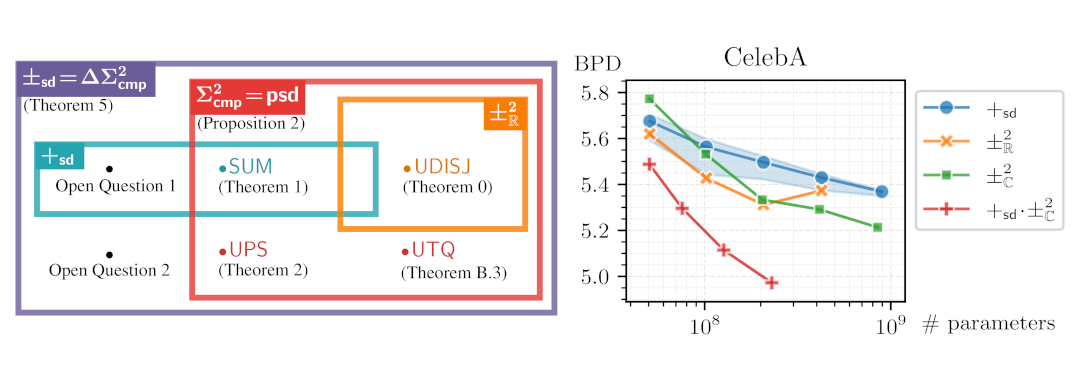

L. Loconte*, A. Mari*, G. Gala*, R. Peharz, C. de Campos, E. Quaeghebeur, G. Vessio, A. Vergari.

TMLR 2025. Featured certification.

L. Loconte, S. Mengel, A. Vergari.

AAAI 2025.

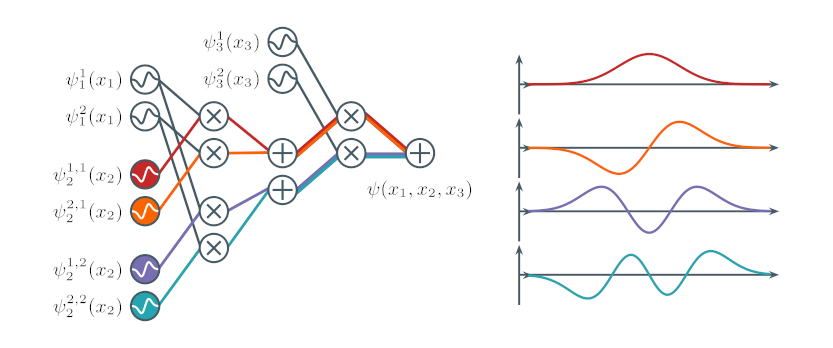

L. Loconte, A. M. Sladek, S. Mengel, M. Trapp, A. Solin, N. Gillis, A. Vergari.

ICLR 2024. Spotlight (top 5%).

L. Loconte, N. Di Mauro, R. Peharz, A. Vergari.

NeurIPS 2023. Oral (top 0.6%).

Software

Here is some software I have contributed to. Also check out my GitHub profile.

cirkit by the APRIL lab (GPL-3.0).